Xbox Kinect Launch

2008-2010

I was the Xbox dashboard's interaction architect, partnering closely with program management, development, user research, and visual/motion design through an intense two-year program to conceptualize, prototype, test, and refine gesture and speech interaction possibilities.

GOALS:

- Launch an updated, natural user interface (NUI) capable Xbox 360 dashboard in fall 2010

- Push the limits of Kinect’s capabilities, uncover new ones, and design to its strengths

- Create new 3D air-gesture and speech interaction paradigms

- Explore different possible dashboard UIs accommodating all forms of input (remote control, controller, air-gesture, and speech)

- Document and protect intellectual property

ACTIONS:

- Defined and evolved a set of NUI design principles

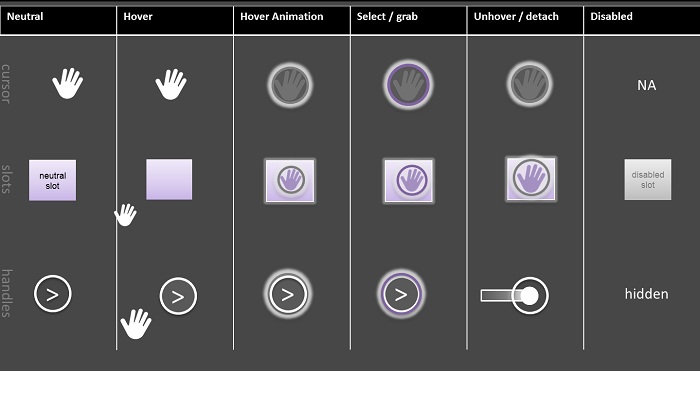

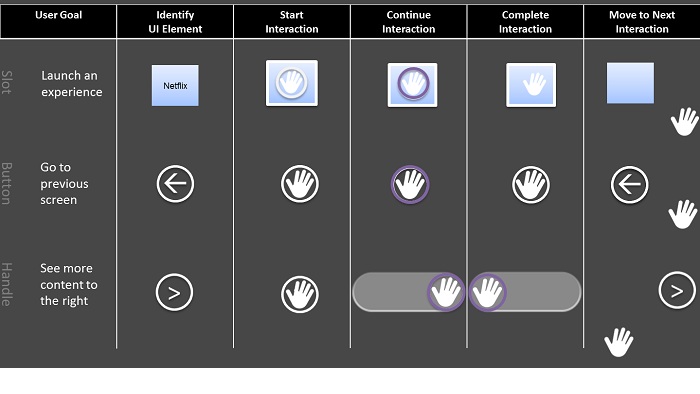

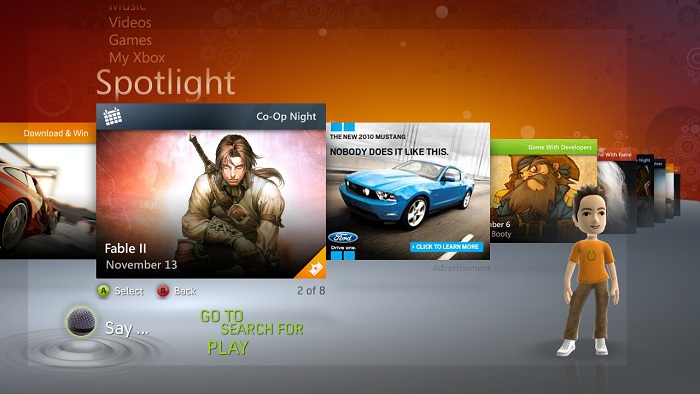

- Identified all core UI interactions, then explored corresponding gesture and speech interaction sets

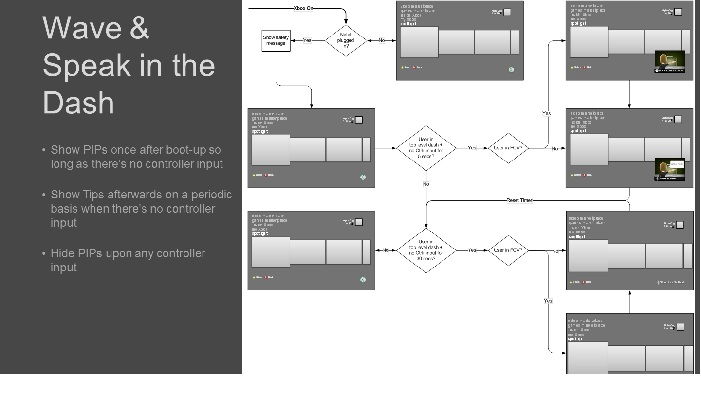

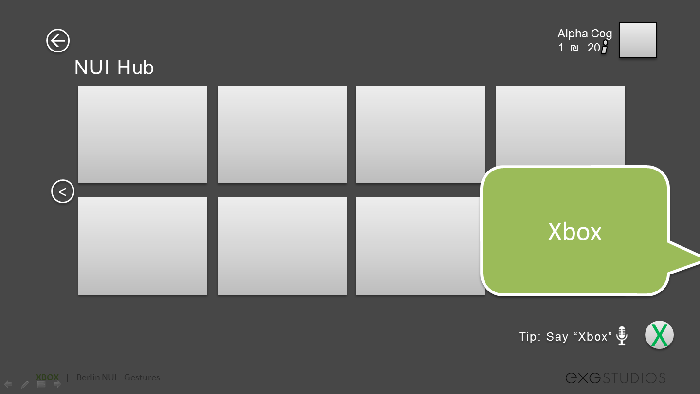

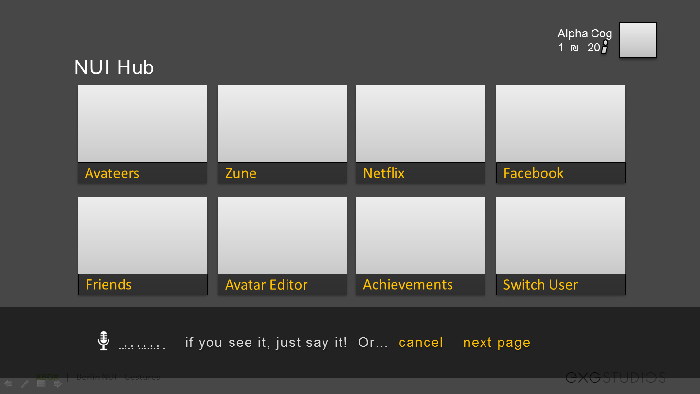

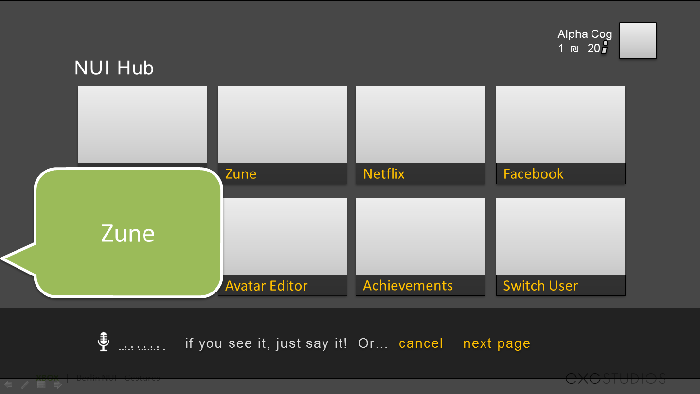

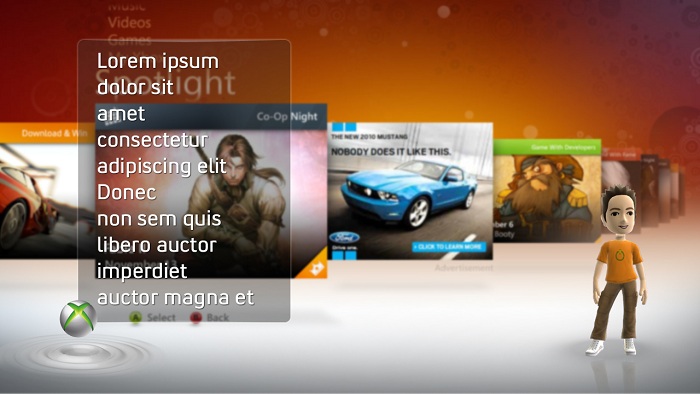

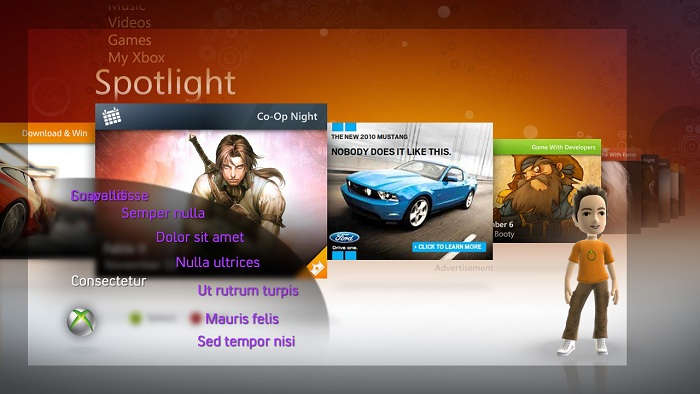

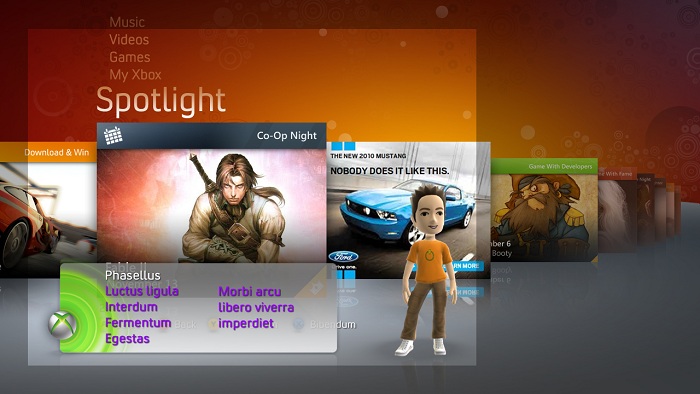

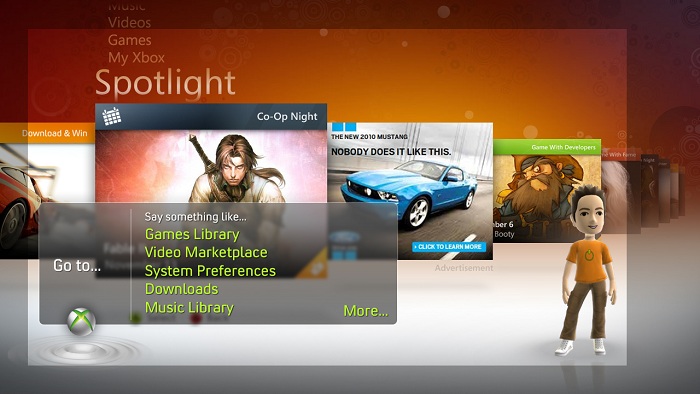

- Explored speech interaction paradigms, syntax, vocabulary, and UI

- Partnered with program managers, design researchers, and developers to prototype, test, refine, and reject numerous interaction models and controls

- Partnered with various game studios to identify and define standard gestures

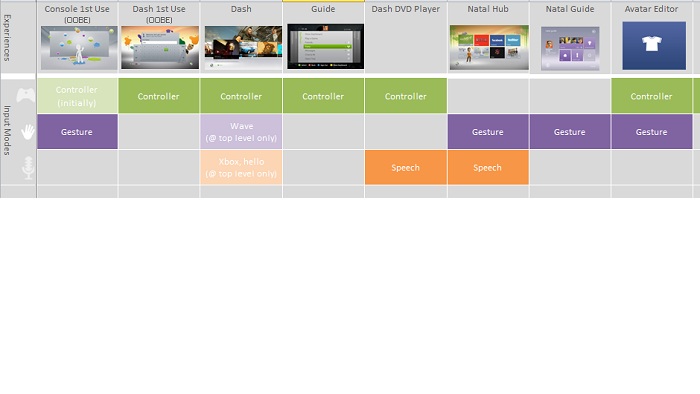

- Explored and defined how users would engage, disengage, usurp control, and switch between input modalities

- Collaborated with design and engineering teams to explore three dashboard UI approaches:

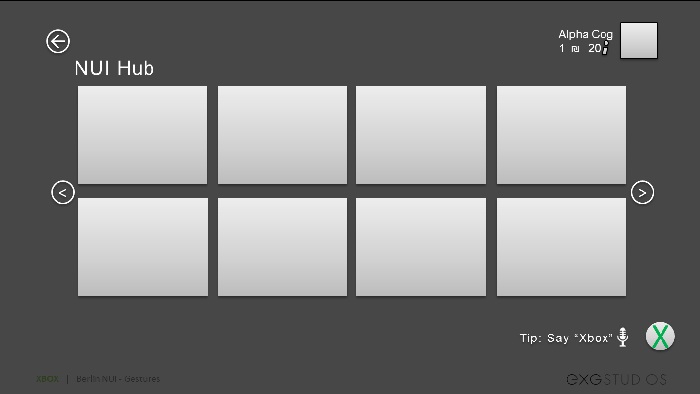

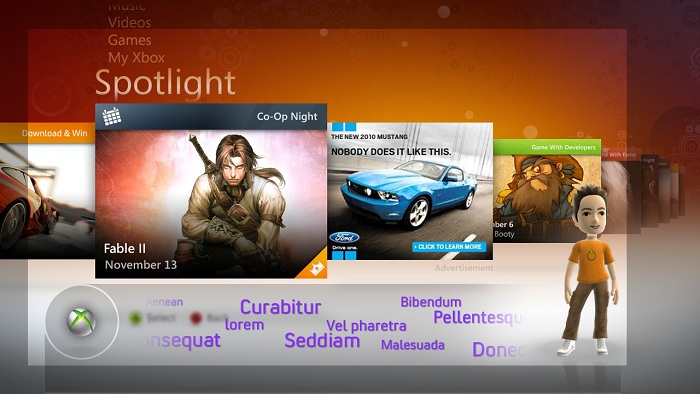

- Adapted: no changes to the existing UI, only minimal additions to accommodate gesture and speech (i.e., NUIcide)

- Evolutionary: some modifications, updating common controls

- Revolutionary: new designs from scratch

- Identified inconsistent NUI availability across the system and pushed to communicate NUI status clearly to end users

- Scripted, storyboarded, and partnered with developers to deliver user education

RESULTS:

- Kinect, launched with Xbox’s fall 2010 release, set a world record as the fastest-selling consumer electronics device in history; most reviews were positive, especially regarding its game-changing potential

- Established Xbox’s first natural user interaction paradigms

- Gesture: Created the engagement, targeting, and selection model

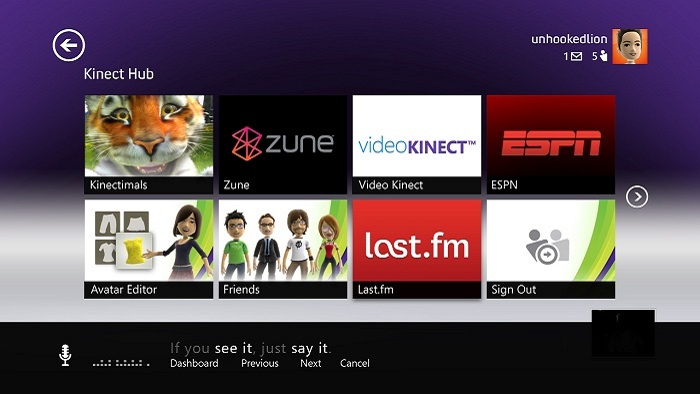

- Speech: Created the “See-it, Say-it” and “Know-it, Say-it” interaction models

- Partnered across teams and disciplines to deliver final gesture and speech interaction UI

- Established a solid foundation on which to evolve and launch future releases

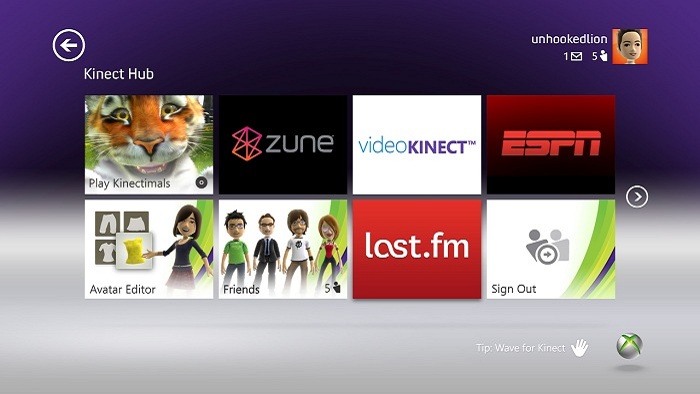

- The existing dashboard was not NUI-enabled at launch; instead, a new area dedicated to Kinect was added. A full redesign that NUI-enabled the entire dashboard followed in a subsequent 2011 release

- Authored and co-authored patent applications:

- 10+ utility patent awards (US patent pending) for Xbox Kinect gesture and speech related interaction design solutions

- 7 design patent awards for Xbox gesture and speech related visual design solutions

- Evangelized gesture design learnings across the company and at various conferences